No one can argue that our eyes capture the best visuals in the world. No matter how high-definition a video or photograph may be, nothing is more realistic than seeing the world with your own eyes. But the scene in front of your eyes doesn’t last long. It may leave a memory, but it is impossible to share the exact scene your eyes captured with others across time and space. Yet it is human nature to try to conquer the impossible. That desire has played a significant role in advancing higher-resolution technologies that display more realistic photographs and more lifelike videos with crisper, larger images.

What is high resolution?

Resolution refers to the number of pixels that make up each frame on a digital screen. A pixel is the smallest element of a digital image. The higher the resolution, the more pixels there are. Therefore, when two displays have the same screen size, the one with more pixels has a higher pixel density, which results in better picture quality.

The evolution of TV goes hand in hand with the development of resolution. The number of pixels per screen was bumped up from SD (720×480) to HD (1280×720). HD then evolved into FHD (1920×1080) and into the even higher resolutions of 4K UHD (3840×2160) and 8K UHD (7680×4320). Packing more pixels into the same area allows finer, more delicate images to be rendered, so a screen of the same size looks sharper and more legible. In a nutshell, higher resolution allows screens to reproduce far richer detail.
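The pixel counts and densities behind these figures can be checked with a little arithmetic. The Python sketch below multiplies width by height for each resolution listed above and computes pixels per inch (PPI) as the diagonal pixel count divided by the screen's diagonal size. The 65-inch screen is a hypothetical example, and the PPI formula assumes the image fills a screen of that diagonal at its own aspect ratio.

```python
import math

# Resolutions as listed in the text: (width, height) in pixels.
RESOLUTIONS = {
    "SD": (720, 480),
    "HD": (1280, 720),
    "FHD": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def pixel_count(width, height):
    """Total number of pixels in one frame."""
    return width * height


def ppi(width, height, diagonal_inches):
    """Pixels per inch along the diagonal of a screen of the given size."""
    diagonal_pixels = math.hypot(width, height)
    return diagonal_pixels / diagonal_inches


if __name__ == "__main__":
    SCREEN_DIAGONAL = 65  # hypothetical screen size in inches
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name:7s} {pixel_count(w, h):>11,d} px  {ppi(w, h, SCREEN_DIAGONAL):6.1f} PPI")
```

On the same hypothetical 65-inch screen, 4K UHD comes out at roughly 68 PPI, and 8K UHD packs four times as many pixels and doubles the PPI.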

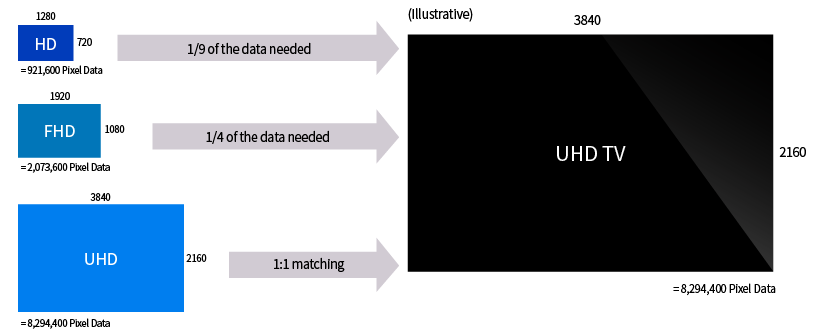

Hardware is not the only thing that has a resolution; all digital content shown on a screen has one as well. If the content and the hardware have the same resolution, the content's pixels map onto the display's pixels 1:1, so viewers get the most out of their screens. A problem arises, however, when the resolution of the content is lower than that of the hardware. How should such a discrepancy be addressed?

For instance, when HD images are played on a 4K UHD TV, only one-ninth of the pixels would be used, because 4K UHD has three times as many pixels as HD both horizontally and vertically. If left unchecked, the other eight-ninths would have no image information and would appear black. Display devices today, however, convert low-definition content into high definition to fill the screen edge to edge. This conversion is called “upconversion” or “upscaling.” It’s like zooming in on a low-definition image. The simplest method copies pixels from the lower-resolution image and repeats them to match the number of TV pixels. When upscaling HD images to fit a UHD TV, each original pixel has to be copied into nine pixels to fill the UHD screen, and upscaling FHD images requires each original pixel to be duplicated into four pixels. The result is an image that fits a higher-resolution display, but the cloned pixels tend to make it look muted or blurry.
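A minimal sketch of pixel copying in Python, assuming a grayscale image stored as a list of rows and an equal integer scale factor on both axes. This is nearest-neighbor upscaling in its simplest form; `replication_factor` and `upscale_replicate` are illustrative names, not part of any real TV software.

```python
def replication_factor(src, dst):
    """Per-axis integer scale factor between two (width, height) resolutions."""
    fx, rx = divmod(dst[0], src[0])
    fy, ry = divmod(dst[1], src[1])
    if rx or ry or fx != fy:
        raise ValueError("this sketch only handles equal integer scale factors")
    return fx


def upscale_replicate(image, factor):
    """Nearest-neighbor upscaling: copy each pixel into a factor x factor block."""
    out = []
    for row in image:
        # Repeat each pixel horizontally...
        wide = [p for p in row for _ in range(factor)]
        # ...then repeat the widened row vertically (separate list copies).
        out.extend(list(wide) for _ in range(factor))
    return out
```

HD to UHD gives a factor of 3, so each pixel becomes 3 × 3 = 9 copies; FHD to UHD gives a factor of 2, so each pixel becomes 2 × 2 = 4. No new detail is created, which is why the result looks soft.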

The magic of converting low-definition images into high-definition ones: 'AI upscaling'

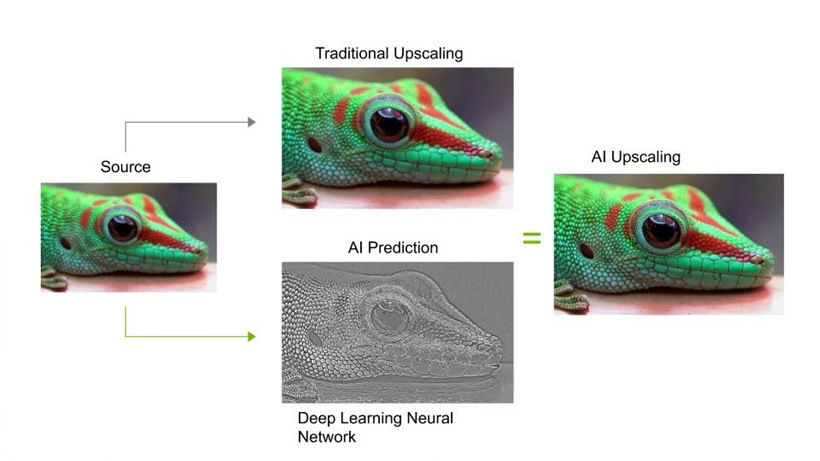

Years ago, TV manufacturers developed techniques that look at adjacent pixels to determine what each new pixel should look like, instead of simply duplicating pixels. This produces sharper, more defined edges. A few years later, as upscaling techniques advanced, processors began examining not just the neighboring pixels within each frame but multiple frames, looking for motion, noise, and other factors to form an image. This demands heavy computation to determine the right amount of sharpness enhancement, noise reduction, and other adjustments, and AI can perform these tasks efficiently.
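Looking at adjacent pixels, instead of copying one, can be illustrated with bilinear interpolation, a common neighbor-based method. The text does not say which method TV makers actually used, so treat this Python sketch as an assumed example: each output pixel is a distance-weighted blend of the four nearest source pixels, with the image corners aligned. Multi-frame motion and noise analysis is not shown.

```python
def bilinear_upscale(image, out_h, out_w):
    """Resize a grayscale image (list of rows) by bilinear interpolation."""
    in_h, in_w = len(image), len(image[0])
    out = []
    for i in range(out_h):
        # Map the output row to a fractional source row (corners aligned).
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # Blend horizontally on the two neighboring rows, then vertically.
            top = image[y0][x0] * (1 - dx) + image[y0][x1] * dx
            bottom = image[y1][x0] * (1 - dx) + image[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out
```

Upscaling `[[0, 100], [100, 200]]` to 3 × 3 fills the center with 100, the average of its four neighbors, instead of a hard step between copied blocks.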

AI upscaling uses deep-learning technology and takes a different approach. Given a low-resolution image, it predicts which original high-resolution image would look like this one if it were reduced to a low resolution (downscaled). To make such predictions accurately, the AI model must be trained on countless images, and deploying a neural network model can boost efficiency. The more the model is trained, the better it becomes at upscaling, producing incredible sharpness and enhanced details. After being trained on datasets such as pictures from many popular TV shows and movies, the model analyzes the incoming pixels and upscales them through real-time inference.
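Training a model to predict "which high-resolution image would look like this when downscaled" is commonly set up by generating (low-resolution, high-resolution) pairs from high-resolution images. The Python sketch below assumes a simple box-average downscaler and mean squared error (MSE) as the training loss; real systems may use other degradations and losses, and the neural network itself is omitted. A pixel-copying baseline is included as the score a trained model should beat.

```python
def box_downscale(image, factor):
    """Average each non-overlapping factor x factor block into one pixel."""
    height = len(image) - len(image) % factor
    width = len(image[0]) - len(image[0]) % factor
    out = []
    for by in range(0, height, factor):
        row = []
        for bx in range(0, width, factor):
            block = [image[y][x] for y in range(by, by + factor) for x in range(bx, bx + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def make_training_pair(hr_image, factor):
    """Return (model input, target): the downscaled image and the original."""
    return box_downscale(hr_image, factor), hr_image


def nearest_upscale(image, factor):
    """Naive baseline: copy each pixel into a factor x factor block."""
    out = []
    for row in image:
        wide = [p for p in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def mse(prediction, target):
    """Mean squared error between two equally sized images (the loss)."""
    diffs = [(p - t) ** 2 for p_row, t_row in zip(prediction, target) for p, t in zip(p_row, t_row)]
    return sum(diffs) / len(diffs)
```

For the 4 × 4 gradient `[[2 * (x + y) for x in range(4)] for y in range(4)]`, the downscaled input is `[[2.0, 6.0], [6.0, 10.0]]` and the pixel-copying baseline scores an MSE of 2.0 against the original; training pushes a network's prediction below that.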

Samsung Electronics applied its machine learning-based AI upscaling technology to its 8K QLED TVs to create the best viewing experience for 4K-mastered content. This technology lets the TV learn from millions of video images in advance, analyze them by type, and automatically improve display resolution. Thanks to these deep learning capabilities, the TV itself finds the optimal correction filter for converting low-definition video to higher definition, classifies each scene by various picture quality factors, and adjusts the contrast ratio and sharpness area by area in real time to reproduce the precise details the original creator intended.
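The idea of adjusting sharpness area by area can be illustrated with a rule-based stand-in. Samsung's classifier is learned and is not described here, so the Python sketch below is only a hypothetical illustration: it splits an image into tiles, labels each tile "flat" or "detailed" by its pixel variance, and maps the label to a sharpening strength. The threshold and gain values are invented for the example.

```python
# Hypothetical tuning values; not taken from any real TV.
VARIANCE_THRESHOLD = 50.0
SHARPNESS_GAIN = {"flat": 0.2, "detailed": 1.0}


def tile_variance(image, y0, x0, size):
    """Variance of the pixel values in one size x size tile."""
    values = [image[y][x] for y in range(y0, y0 + size) for x in range(x0, x0 + size)]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def classify_tiles(image, size):
    """Label each full tile 'flat' or 'detailed' by its local variance."""
    height, width = len(image), len(image[0])
    labels = []
    for y0 in range(0, height - size + 1, size):
        row = []
        for x0 in range(0, width - size + 1, size):
            variance = tile_variance(image, y0, x0, size)
            row.append("detailed" if variance > VARIANCE_THRESHOLD else "flat")
        labels.append(row)
    return labels


def sharpness_gains(labels):
    """Map each tile label to the sharpening strength to apply there."""
    return [[SHARPNESS_GAIN[label] for label in row] for row in labels]
```

Flat areas such as a clear sky get gentle sharpening, which avoids amplifying noise, while textured areas get stronger sharpening.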

The key to ultra-high-definition resolution: the 'image sensor'

▲ Samsung Electronics' mobile image sensor 'ISOCELL GN1'

(Image: Samsung Electronics Newsroom)

Upscaling is not limited to enlarging photos or videos that have already been created. Image sensors, which continue to be developed to enhance visuals from the moment of shooting, also help bring higher-definition images to life. An image sensor is a kind of semiconductor that converts the light entering the camera lens into electrical signals; it works like the retina of the human eye. There are two main types of image sensors: Complementary Metal Oxide Semiconductor (CMOS) and Charge-Coupled Device (CCD). Because CMOS sensors consume less power than CCDs and have largely closed the gap in image noise, they are now adopted in many devices.

(Image: Samsung Electronics Newsroom)

Packing as many pixels as possible into an image sensor can enhance the image quality of photographs and videos. At the same time, manufacturers are trying to make smartphones and other digital devices smaller and lighter. Therefore, an image sensor that squeezes as many pixels as possible into a smaller area while still receiving more light has a competitive edge in the market.

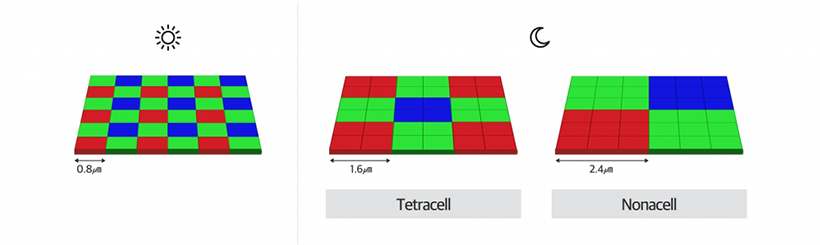

Smaller pixels, however, can result in fuzzy or dull pictures because each pixel has a smaller area in which to receive light. Samsung Electronics developed 'ISOCELL Plus' technology to minimize light loss and interference between pixels by improving the pixels' optical structure. The name ‘ISOCELL’ is a compound of the words ‘Isolate’ and ‘Cell’. The technology places a physical barrier between neighboring pixels, isolating them and preventing captured light from leaking out. In low-light environments, the sensor merges nine adjacent pixels into one, giving it a light-receiving area nine times larger; in bright environments, it can capture images at resolutions of up to 108MP.
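Merging nine adjacent pixels is a form of pixel binning. The Python sketch below sums each 3 × 3 block of photosite values into one output pixel, so each output pixel collects nine pixels' worth of light. As a simplifying assumption it treats the sensor as a monochrome grid; real color sensors bin same-color pixels under a color filter array. The names are illustrative.

```python
def bin_pixels(raw, factor=3):
    """Sum each factor x factor block of photosite values into one pixel."""
    height, width = len(raw), len(raw[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be multiples of factor")
    out = []
    for by in range(0, height, factor):
        row = []
        for bx in range(0, width, factor):
            row.append(sum(raw[y][x] for y in range(by, by + factor)
                           for x in range(bx, bx + factor)))
        out.append(row)
    return out


def binned_megapixels(full_mp, factor=3):
    """Output resolution after binning factor x factor pixels into one."""
    return full_mp / (factor * factor)
```

The trade-off is resolution: binning a 108MP sensor 3 × 3 yields `binned_megapixels(108) == 12.0`, a 12MP image with nine times the light per pixel.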

One long exposure and multiple short shots: high-definition images from a smartphone camera

Put another way, high resolution means an image that captures a high level of sharpness and detail. Besides pixel density, the pixels themselves and how they are composed also determine image quality. Recently, smartphones have adopted technologies that produce the best picture quality by fusing multiple shots.

Deep Fusion and Smart HDR, featured in Apple’s iPhone 12, are cases in point. Apple’s Deep Fusion has the phone camera take multiple shots of the same scene at different exposure levels and composites the frames to select the best combination of exposures for the subject. The iPhone’s camera captures a series of short frames before the shutter button is pressed and one long exposure when it is pressed, for nine frames in total. The technology then looks through these shots, finds the optimal pixels for highlights and shadows, adds detail, and reduces image noise to complete the HDR image.
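Deep Fusion's internals are proprietary, so the Python sketch below instead shows a generic, simplified exposure-fusion step, assuming the frames are already aligned and their values scaled to [0, 1]. Each pixel of each frame gets a weight that peaks at mid-gray (a well-exposedness measure used in classic exposure fusion), and the result is a per-pixel weighted average. It is not Apple's algorithm; alignment, detail enhancement, noise reduction, and multi-scale blending are all omitted.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Gaussian weight peaking at mid-gray (0.5) for a value in [0, 1]."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(frames, sigma=0.2, eps=1e-12):
    """Per-pixel weighted average of aligned, equally sized frames."""
    height, width = len(frames[0]), len(frames[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            # Well-exposed samples dominate; clipped shadows/highlights barely count.
            weights = [well_exposedness(f[y][x], sigma) for f in frames]
            total = sum(weights) + eps
            row.append(sum(w * f[y][x] for w, f in zip(weights, frames)) / total)
        fused.append(row)
    return fused
```

With a dark frame `[[0.05, 0.5]]` and a bright frame `[[0.5, 0.98]]`, each fused pixel lands close to the well-exposed 0.5 sample rather than the crushed shadow or blown highlight.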

The 'Neural Engine', a processor dedicated to machine learning in the iPhone 12 series, analyzes the shots and selects the pixels best suited for sharpness, color, highlights, and shadows. The image signal processor (ISP) designed by Apple then combines these pixels to create the final image. With technologies such as Deep Fusion, even digital zoom can offset the increase in noise caused by stretching the resolution.

The future of upscaling technology

The latest upscaling technologies are evolving rapidly in tandem with AI. One example is the convolutional neural network (CNN), an artificial neural network that performs exceptionally well at image processing and is accelerating the development of image classification and processing technologies. A CNN model extracts features such as dots, lines, and surfaces from images and is trained extensively on these features. Thanks to this training, the model can decide on its own how to restore lost details.
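The feature extraction a CNN performs rests on convolution: sliding a small kernel over the image and summing the products at each position. The Python sketch below applies a hand-picked vertical-edge kernel followed by a ReLU, the building block of one CNN layer; in a real CNN the kernel values are learned during training rather than chosen by hand. As in common deep-learning libraries, the "convolution" here is technically cross-correlation (the kernel is not flipped). The names are illustrative.

```python
# Hand-picked kernel that responds to dark-to-bright vertical edges.
VERTICAL_EDGE = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]


def conv2d(image, kernel):
    """'Valid' 2D cross-correlation of a grayscale image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    height, width = len(image), len(image[0])
    out = []
    for i in range(height - kh + 1):
        row = []
        for j in range(width - kw + 1):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0.0, x) for x in row] for row in feature_map]
```

On a 5 × 6 image whose rows are `[0, 0, 0, 10, 10, 10]`, the feature map is `[0, 30, 30, 0]` in every row: flat regions produce no response, and the edge lights up. Stacking many learned kernels and layers lets a CNN build up from such edges to more complex structures.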

Image sensors, which can enhance image quality from the shooting stage, have become ever thinner while packing in far more pixels. Samsung has reportedly shipped image sensors with 150 million pixels this year and plans to develop image sensors with 600 million pixels in the future. Given that the human eye is said to perceive about 500 million pixels, Samsung is aiming to pack more pixels into its sensors than the human eye can perceive.

The desire to share what we see with others may stem from a desire for empathy. I look forward to a new era in which further advanced upscaling technology lets us upscale subjective scenes, created by an exquisite combination of emotion and reason, into objective scenes.

※ This article reflects the editor’s opinion and does not reflect Samsung Display Newsroom’s stance or strategies.