If you go through the photos you took over the past year, you'll notice that even the same scenery looks darker and duller in winter. The hues and contrast may seem off. Seasonal factors such as temperature play a part, but dust in the air also affects the tones in photos: clear blue skies seem to turn dusty grey in winter. Several consecutive days of high fine-dust levels are likely to trigger fine dust reduction measures that limit pollutant emissions from fossil fuel power plants, factories, construction sites, and diesel vehicles. Europe, which suffered severe air pollution during the Industrial Revolution, is moving away from combustion engines. Since 2016, governments across the continent have announced a series of plans to end sales of new combustion-engine vehicles, some as early as 2025. This phase-out of petroleum-fueled engines means that future transportation will be restructured around electric vehicles (EVs).

Electric vehicles are essentially moving electronics. In other words, an electric car, much like a smartphone, is an electronic product. Approaching electric vehicles as electronic products rather than automobiles makes the concept of autonomous vehicles easier to understand. Many cars today are already equipped with various autonomous driving functions, and competition to develop technologies that substitute for human labor is fierce. Autonomous driving means that a vehicle can decide on its own how to move. The functions of human neural networks and muscles, which evolved over millions of years, are now being replaced by electronic devices such as semiconductor chips and motors. Ultimately, a self-driving vehicle's driving ability depends on how well it mimics human visual perception.

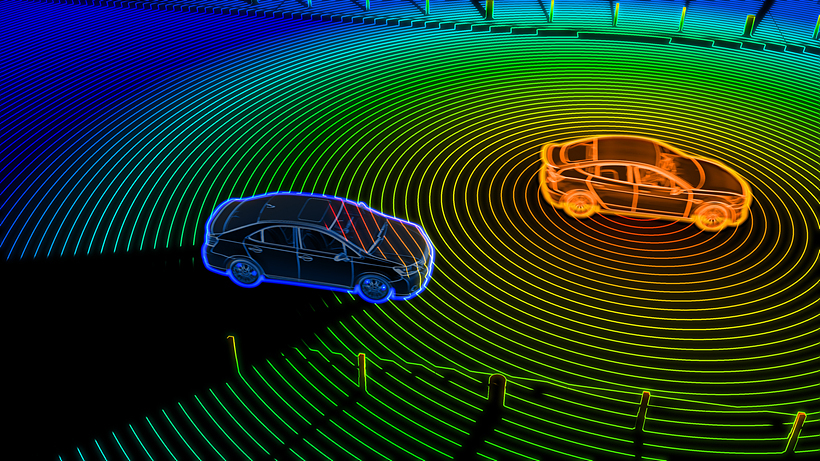

LiDAR, a laser-based sensing device, tracks three-dimensional space and, much as humans reconstruct what they see, fills a coordinate space with digital data. But this critical data, widely regarded as essential for Level 5* autonomous driving, does not include color. It is merely a collection of coordinates. A laser light source cannot detect color; that requires a camera equipped with a color filter. Realizing visual perception in self-driving technology is therefore complex, because color data must be considered on top of spatial data. For humans, fortunately, it is not so tricky. People intuitively distinguish colors and generally have no trouble dealing with many colors at once. This explains why there are generally accepted color codes for road signs, traffic lights, and vehicles that carry children: no matter where you go, a red traffic light always means “Stop.” Machines, on the other hand, have a harder time with even simple color recognition, such as telling apart the complementary colors red and green. Many factors can affect a machine's color perception: differences in lighting between day and night, changes in color tone due to the weather, light reflected from the glass windows of buildings and cars, diffuse reflection on a rainy night, and a dusty atmosphere caused by yellow dust and fine dust.

*Level 0 - No automation; Level 1 - Driver assistance; Level 2 - Partial automation; Level 3 - Conditional automation; Level 4 - High automation; Level 5 - Full automation (SAE)
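To make the point about LiDAR concrete: a LiDAR return is only an (x, y, z) coordinate, so color has to be borrowed from a camera image. The Python sketch below shows the basic idea under simplifying assumptions that are ours, not the article's: the points are already expressed in the camera's coordinate frame (extrinsic calibration is skipped), the camera is an ideal pinhole with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, lens distortion and occlusion are ignored, and the image is a plain nested list of RGB tuples. The function names `project` and `colorize` are illustrative only.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical types for this sketch: a LiDAR point is just coordinates, a pixel is an RGB triple.
Point3D = Tuple[float, float, float]  # (x, y, z) in the camera frame; z points forward
RGB = Tuple[int, int, int]


def project(point: Point3D, fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Ideal pinhole projection to the nearest pixel (u, v); None if the point is behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return round(u), round(v)


def colorize(points: Sequence[Point3D], image: List[List[RGB]],
             fx: float, fy: float, cx: float, cy: float) -> List[Tuple[Point3D, Optional[RGB]]]:
    """Pair each point with the camera pixel it projects onto, or None if it misses the image."""
    height, width = len(image), len(image[0])
    colored = []
    for p in points:
        pixel = project(p, fx, fy, cx, cy)
        if pixel is not None and 0 <= pixel[0] < width and 0 <= pixel[1] < height:
            u, v = pixel
            colored.append((p, image[v][u]))  # rows are indexed by v, columns by u
        else:
            colored.append((p, None))  # no color information available for this point
    return colored


# Toy 4x4 image: left half red, right half green.
image = [[(255, 0, 0) if u < 2 else (0, 255, 0) for u in range(4)] for _ in range(4)]
points = [(0.0, 0.0, 5.0), (-1.0, 0.0, 1.0), (0.0, 0.0, -3.0), (10.0, 0.0, 1.0)]
for point, color in colorize(points, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0):
    print(point, color)
```

Points that fall behind the camera or outside the frame stay colorless, which mirrors the observation that spatial data on its own carries no color.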

Colors Needed for Collaborative Measures Between Robots and Humans

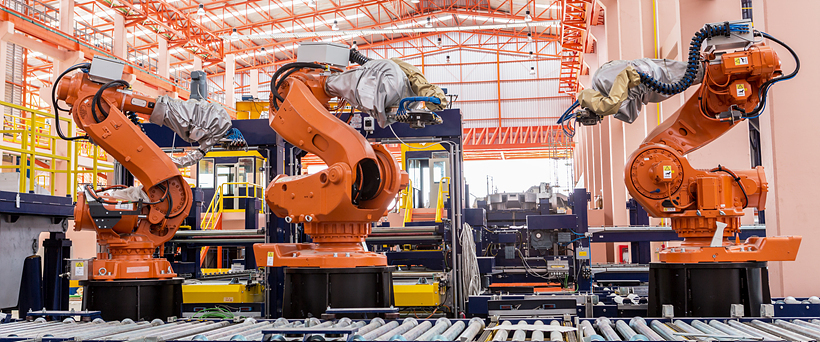

If you think about it, a self-driving car is a robot: it recognizes its surroundings and moves on its own. Robots, too, use LiDAR sensors to perceive spatial information, make judgments, and move. Robotics is an interdisciplinary field where all types of engineering technologies converge. Mechanical engineering covers the mobility of devices and equipment, computer engineering deals with sensing and interpreting the surroundings, and ergonomics is needed to ensure adequate performance and safe collaboration with humans. Whenever a technology calls for human interaction, research that brings together fields such as cognitive science, psychology, and design is a must.

Because artificial intelligence does not operate a physical body, it requires only data. Robots, in contrast, need more because they physically move through different spaces. Many autonomous vehicles have been around for a while, yet you'll notice very few robots capable of taking walks with humans. Whereas movies and cartoons show flying, transforming robots saving lives in all kinds of disasters, real-life robots struggle to step out of the lab on their own. To move from one point to another, robots must be connected to a myriad of equipment and wires and accompanied by multiple researchers. Tiny variations, such as a sunken sidewalk block or a curb, require additional calculations, adding to the strenuous effort of ‘moving.’ Robots are generally slower and clumsier than humans at acquiring and processing visual information. Cross-checking coordinates against spatial data to set a new route in real time takes a tremendous amount of energy. Batteries pose a further dilemma: more batteries supply more electrical energy but also add weight, so the number a robot can carry is limited. This is why it is an incredible feat for autonomous vehicles to drive at high speed on the road while making real-time decisions every second based on the visual information they perceive.

With vision being the dominant human sense, it's no surprise that our world consists mainly of visual information. Even what our minds take in and decipher unconsciously carries visual data such as color. Color is everywhere: from views of the Earth from space to the red spikes shown on colorized images of the COVID-19 virus.

Even in grayscale imaging such as ultrasound, humans create visual rules and add color to make key features stand out. Such color systems, which we take for granted, are entirely unfamiliar to most robots. In a world inundated with colors, extracting the relevant data takes significant effort for a robot. Distinguishing grapefruits, oranges, and tangerines is still a challenge for robots, and reliably telling images of cats from images of dogs is a relatively recent achievement in artificial intelligence. Cross-checking shapes and colors to identify similarities and differences, then converting attributes into numeric values for comparison against baseline values, is not only complex but also highly error-prone when affected by factors such as camera angle, obstacles, lighting conditions, and reflected light.
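The comparison step described above can be illustrated with a toy example. The Python sketch below classifies a pixel by finding the nearest reference color, first by Euclidean distance in raw RGB and then by hue alone, and shows how a gray haze, standing in for fine dust, can push a red light's RGB value closer to yellow. The reference colors, the haze color, the blend amount, and the function names are made-up values chosen for illustration, not calibrated data; real perception systems use far more elaborate, usually learned, models.

```python
import colorsys
import math

# Made-up reference colors (8-bit RGB) for a hypothetical traffic-light classifier.
REFERENCE = {
    "red": (220, 40, 40),
    "yellow": (230, 200, 40),
    "green": (40, 200, 80),
}


def add_haze(rgb, haze=(180, 180, 180), amount=0.7):
    """Blend a color toward a gray haze color: a crude stand-in for dust or fog."""
    return tuple(round((1 - amount) * c + amount * h) for c, h in zip(rgb, haze))


def classify_rgb(rgb):
    """Nearest reference color by Euclidean distance in raw RGB space."""
    return min(REFERENCE, key=lambda name: math.dist(rgb, REFERENCE[name]))


def hue_degrees(rgb):
    """Hue angle in degrees (0-360) of an 8-bit RGB color."""
    h, _s, _v = colorsys.rgb_to_hsv(*(c / 255 for c in rgb))
    return h * 360


def classify_hue(rgb):
    """Nearest reference color by circular hue distance, ignoring brightness and saturation."""
    hue = hue_degrees(rgb)

    def hue_gap(name):
        d = abs(hue - hue_degrees(REFERENCE[name]))
        return min(d, 360 - d)  # hue wraps around, so 350 degrees is close to 0

    return min(REFERENCE, key=hue_gap)


hazy_red = add_haze(REFERENCE["red"])
print(hazy_red)                # (192, 138, 138)
print(classify_rgb(hazy_red))  # yellow
print(classify_hue(hazy_red))  # red
```

Hue ignores the brightness and saturation that gray haze mostly changes, so it survives this particular distortion. It is not a general fix: a heavily washed-out pixel loses saturation until its hue becomes unreliable, and colored light, such as a streetlight or a reflection, shifts hue directly.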

Therefore, for many robots, including autonomous vehicles, monochrome or strongly contrasting colors in simple shapes are easier to process. Engineers who design control systems prefer conditions with less noise and fewer errors. To prepare for a future in which we coexist with robots, roads and streets must be restructured with lanes and signs marked in vivid colors, and with buffer zones that separate the spaces for high-performance machines from those for humans. Streets must be paved seamlessly and cleared of obstacles. Such areas are ultimately safer for humans as well; human-centric approaches are necessary for coexisting with machines.

Color Displays for the Future

To machines, the world is a compilation and flow of big data. Humans, meanwhile, have long used technology to see color through data. As Isaac Newton's 17th-century experiments showed, when sunlight entering through a small hole passed through a prism and spread into the colors of the rainbow, and as painter Johannes Vermeer's probable use of the camera obscura to trace the images it projected suggests, color has influenced not only science and art but also industry and leisure. Historically, artists searched for higher-quality pigments while chemists experimented with mixing newly discovered materials to reproduce bright, highly saturated colors.

The fact that color photography was pursued soon after black-and-white photography was introduced shows how strong the human desire to reproduce vivid color was. After the Venetian painters' colorism gave way to the Florentines' emphasis on drawing in the 16th century, the modern period's emphasis on reason and ideology dominated the world and repressed the expression of color for a long time. It was an era of black and white. The Impressionists, who opposed this ideological tradition, began to express the primary colors as perceived by the human eye, tearing down the long-held order of the monochromatic era. Eventually, audiences came to enjoy vibrant optical color, rather than faded color, in film and broadcast television. Newton's discovery of the spectrum of colors in light could now be experienced directly through display devices.

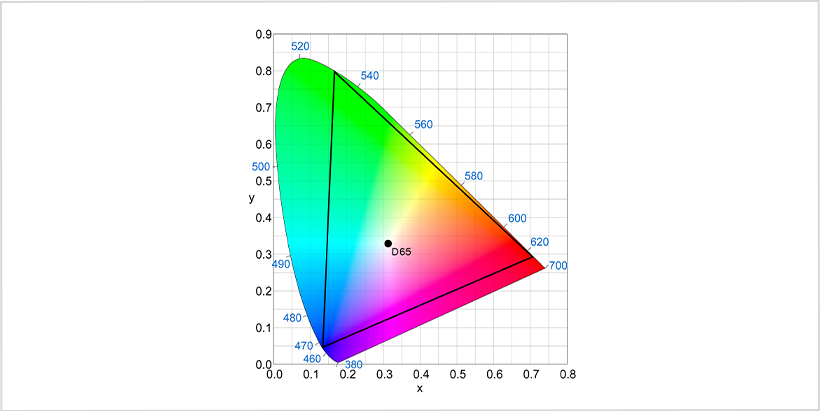

Recently, large display screens have been installed next to the blackboard in school classrooms in Korea. Traditional classrooms had a small TV suspended from the ceiling in a corner, but more classrooms today are equipped with large displays about half the size of the blackboard. Since online classes began, accessing new information via display devices has become commonplace for students. A wide variety of displays provide entertainment and information, whether at home or in the streets. In line with this change, color education must also evolve. Color education based on paper and printed materials is still needed, but more educational content suited to today's display-focused environment should be provided. Students should have opportunities to learn the basic principles of RGB color composition, understand the CIE 1931 color space, and study the properties of the BT.2020 (Rec.2020) color gamut. A proper understanding of the characteristics of, and differences between, digital color systems is a prerequisite for choosing a good display and creating visually superior content. To build a future-ready environment for understanding color, excellent displays and color content are needed more than anything. Samsung Display's QD-Display, which recently entered commercial production, offers future generations a more advanced color display. Unlike LCDs, which require a backlight, QD-Display is self-emissive, which helps it deliver near-perfect image quality. Using self-emissive quantum dots allows colors to be reproduced with high purity and expands the color gamut closer to the BT.2020 standard.
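The gamut comparison mentioned above can be made concrete in the CIE 1931 xy chromaticity diagram, where each RGB standard is a triangle whose corners are its red, green, and blue primaries. The Python sketch below computes those triangle areas with the shoelace formula, using the published primaries of BT.709 (the HD standard, added here only for comparison) and BT.2020. The area ratio in xy is a rough indicator at best: the xy diagram is not perceptually uniform, and display coverage figures are often quoted in other diagrams such as CIE 1976 u'v', so the result should not be read as a specification for any particular display.

```python
# CIE 1931 xy chromaticity coordinates of each standard's red, green, and blue primaries.
PRIMARIES = {
    "BT.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "BT.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Shoelace formula: area of the triangle spanned by three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


areas = {name: triangle_area(p) for name, p in PRIMARIES.items()}
for name, area in areas.items():
    print(f"{name}: {area:.4f}")

ratio = areas["BT.709"] / areas["BT.2020"]
print(f"BT.709 area as a share of BT.2020 area: {ratio:.1%}")  # about 52.9%
```

With these primaries, the BT.709 triangle lies entirely inside the BT.2020 triangle and covers roughly 53% of its area, which is one way to see why a gamut that moves closer to BT.2020 can show noticeably more saturated colors.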

QD-Display also boasts higher color volume and luminance. These enhancements give users brighter, more saturated colors; simply put, the display offers richer color. Although many people still watch media content on the small screens of their smartphones, the arrival of high-resolution displays has raised consumers' standards for visual devices. Everyone knows that 4K displays offer much higher resolution than HD displays. We can never get used to inferior image quality, any more than we can get used to a haze of fine dust over a clear blue sky: once you experience a wide range of rich colors, you can never go back to the dull colors of the past. Advanced color display technology is therefore not merely about product enhancement but also about heightening human sensory experience. Human evolution through displays has already begun, and at the heart of it all stands Samsung Display.